Secure AI at Scale Without Compromise

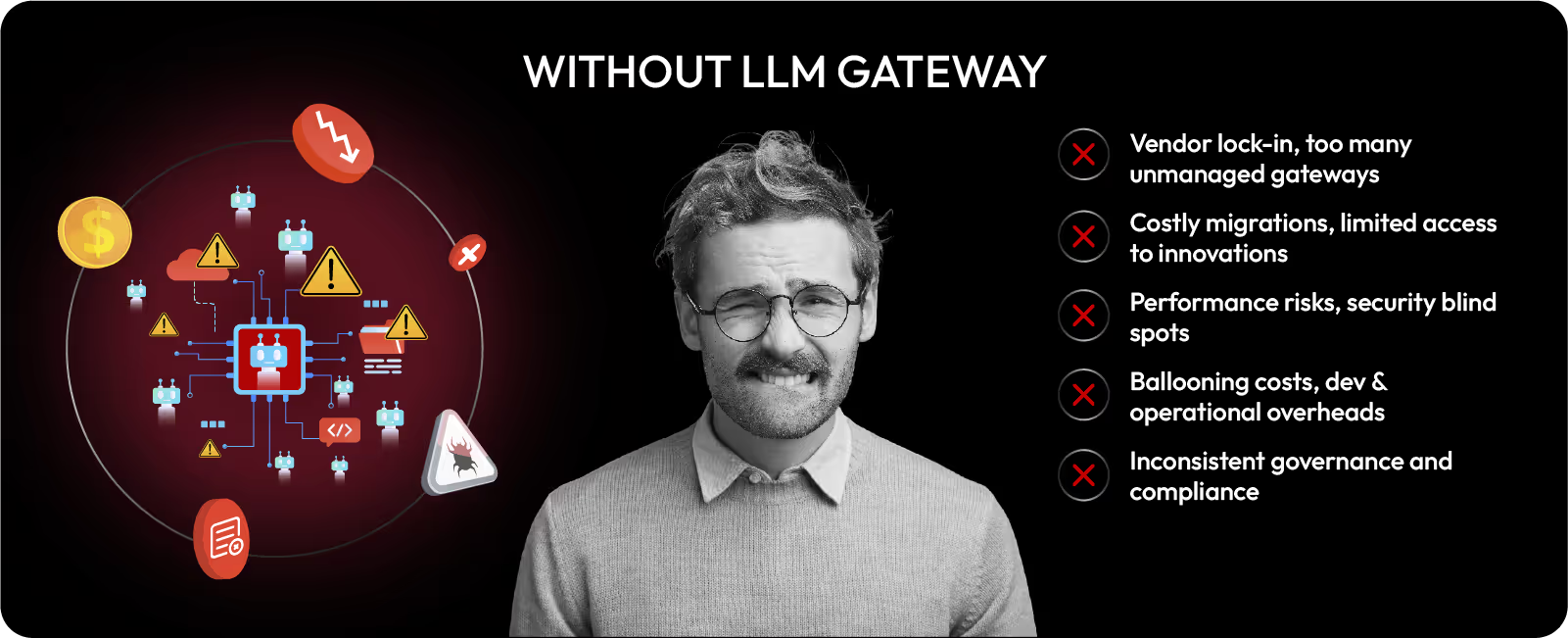

Organizations are deploying AI agents, LLM-powered applications, and generative AI tools at unprecedented speed. Every interaction is a potential security risk, from prompt injection attacks to inadvertent leakage of regulated information.

Traditional security tools weren't designed for the unique threat landscape AI creates. Security teams need purpose-built controls that understand AI-specific vulnerabilities while maintaining the performance enterprises demand.

Regulatory frameworks like GDPR, HIPAA, and PCI DSS don't exempt AI systems. Healthcare providers, financial institutions, and regulated enterprises face severe penalties if AI applications expose protected information.

Without proper governance, a single misconfigured AI agent could expose thousands of customer records, trigger regulatory investigations, and damage brand reputation irreparably.

Think of Invinsense Real MDR as your dedicated concierge for cybersecurity, compliance, and remediation. Get round-the-clock security with unmatched breadth and depth of coverage: we manage exposures, prioritize and fix vulnerabilities, and handle compliance, security validation, and remediation on your behalf, keeping you informed every step of the way.

Invinsense Real MDR brings together a powerful, purpose-built security stack and battle-ready security teams to deliver high-fidelity, faster, business-context-driven security results. The stack ranges from its XDR, which combines SIEM, SOAR, advanced deception, multi-signal threat intelligence, and case management, to its OXDR, which covers Exposure Assessment and Adversarial Exposure Validation. The teams include offensive strikers, DevSecOps and AppSec engineers, and compliance experts.

A single integration point connects enterprise applications and AI agents to multiple LLM providers: OpenAI, Anthropic, Google, Azure, and custom models.

Granular role-based permissions ensure that each user can access only the AI capabilities appropriate to their role, while audit trails support compliance.

Automatically route requests to optimal providers based on security policies, cost parameters, and performance requirements.

Detects and blocks malicious prompts attempting to manipulate model behavior or extract unauthorized information.

Continuously assess AI models for known vulnerabilities, misconfigurations, and security weaknesses.

Test your AI defenses with automated adversarial attack scenarios in isolated lab environments.
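To make the idea of routing requests by security policy, cost, and performance more concrete, here is a minimal Python sketch of one way such a rule could work. It is not Invinsense's implementation: the `Provider` and `Request` fields, the provider names, and the figures are hypothetical, and the evaluation order (security policy first, then latency, then cost) is an assumption chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical provider metadata; fields and numbers are illustrative only.
@dataclass(frozen=True)
class Provider:
    name: str
    approved_for_sensitive_data: bool  # security-policy attribute
    cost_per_1k_tokens: float          # cost parameter (USD, assumed)
    p95_latency_ms: int                # performance attribute


# Hypothetical request attributes a gateway might inspect before routing.
@dataclass(frozen=True)
class Request:
    contains_sensitive_data: bool
    max_latency_ms: int


def route(request: Request, providers: list[Provider]) -> Optional[Provider]:
    """Return the cheapest provider that meets security and latency constraints."""
    # 1. Security policy: sensitive requests may only go to approved providers.
    eligible = [
        p for p in providers
        if p.approved_for_sensitive_data or not request.contains_sensitive_data
    ]
    # 2. Performance requirement: drop providers slower than the request allows.
    eligible = [p for p in eligible if p.p95_latency_ms <= request.max_latency_ms]
    # 3. Cost: among what remains, prefer the cheapest; None if nothing qualifies.
    return min(eligible, key=lambda p: p.cost_per_1k_tokens, default=None)


# Usage example with made-up providers.
providers = [
    Provider("public-llm-a", False, 0.5, 400),
    Provider("private-llm-b", True, 2.0, 600),
    Provider("self-hosted-c", True, 1.0, 1500),
]
print(route(Request(True, 1000), providers).name)   # private-llm-b
print(route(Request(False, 1000), providers).name)  # public-llm-a
print(route(Request(True, 500), providers))         # None
```

Applying the security filter before cost keeps a cheaper but unapproved provider from ever receiving sensitive data. Returning `None` when no provider qualifies lets the caller reject or queue the request instead of silently breaking policy.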

Discover complete cybersecurity expertise you can trust and prove you made the right choice!